Explore the Top AI Models: A Comprehensive Explanation

In today’s diverse landscape of artificial intelligence models, each specialized for different domains, identifying the top models in any given area is a significant challenge.

A novel methodology for ranking these AI models has been introduced, based on their propensity for ‘hallucinations’: occurrences where an AI produces incorrect or nonsensical information.

Identifying the “best” AI model is difficult, in part because explicit evaluation criteria are hard to define. The nature and structure of the data used to train these models also strongly influence their outputs.

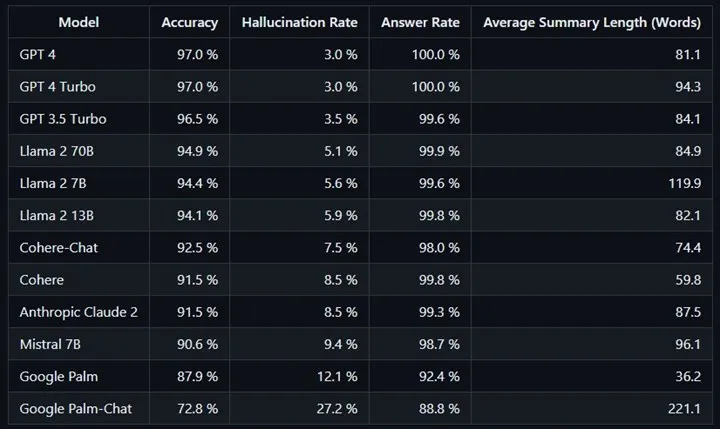

Given these considerations, judging these tools by the accuracy of their outputs emerges as the most viable approach. To this end, Vectara has published an AI hallucination chart.

The chart ranks leading AI chatbots by how well they avoid hallucinations, offering a distinctive view of how these models perform.
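Ranking models this way comes down to a simple calculation: a model’s hallucination rate is the share of its outputs judged to contain hallucinated content, and models are ordered from the lowest rate to the highest. The sketch below assumes each output has already been labeled as hallucinated or not (how Vectara produces those labels is not covered here); the `ModelResult` class, the `rank_by_hallucination` helper, the model names and the labels are all hypothetical toy values, not data from the chart.

```python
from dataclasses import dataclass


@dataclass
class ModelResult:
    """Hypothetical container: one model and its per-output labels."""
    name: str
    # True means that output was judged to contain hallucinated content.
    labels: list[bool]

    def hallucination_rate(self) -> float:
        """Fraction of outputs flagged as hallucinated (0.0 to 1.0)."""
        if not self.labels:
            raise ValueError(f"no labeled outputs for {self.name}")
        return sum(self.labels) / len(self.labels)


def rank_by_hallucination(results: list[ModelResult]) -> list[tuple[str, float]]:
    """Return (name, rate) pairs sorted from fewest to most hallucinations."""
    scored = [(r.name, r.hallucination_rate()) for r in results]
    # sorted() is stable, so models with equal rates keep their input order.
    return sorted(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical toy labels, not results from Vectara's chart.
    results = [
        ModelResult("model_a", [False, False, True, False]),
        ModelResult("model_b", [False, False, False, False]),
        ModelResult("model_c", [True, False, True, False]),
    ]
    for name, rate in rank_by_hallucination(results):
        print(f"{name}: {rate:.1%}")
```

With these toy labels the ranking puts `model_b` first (0.0%), then `model_a` (25.0%), then `model_c` (50.0%). A real evaluation would give every model the same set of test documents so that the rates are directly comparable.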

The best AI models

The models below appear in the chart’s order, from the fewest hallucinations to the most.

- GPT-4: The fourth generation of OpenAI’s Generative Pre-trained Transformer (GPT) series, representing advances in natural language understanding and generation. GPT-4 likely incorporates improvements in model architecture, training methods, and performance over its predecessor, GPT-3.5.

- GPT-3.5: An intermediate version of the GPT series, sitting between GPT-3 and GPT-4. GPT-3.5 likely introduced incremental enhancements over GPT-3, such as fine-tuning model parameters, refining training data, or optimizing computational techniques.

- Llama 2 70B: The 70-billion-parameter version of Llama 2, the second generation of Meta’s Llama language models. Llama models are built for advanced natural language understanding tasks, using large-scale neural networks to capture complex linguistic patterns and semantics.

- Llama 2 7B: A smaller Llama 2 model with 7 billion parameters. Although far smaller than Llama 2 70B, it still handles a variety of language processing tasks with considerable accuracy and efficiency.

- Llama 2 13B: A Llama 2 model with 13 billion parameters, between the 7B and 70B versions in size. It likely strikes a balance between computational efficiency and performance, making it suitable for a wide range of natural language processing applications.

- Cohere-Chat: A conversational AI model from Cohere, designed to generate human-like responses in chatbot interactions. It aims to produce coherent, contextually relevant dialogue.

- Cohere: Likely refers to Cohere’s general-purpose language models, offered for a range of language understanding and generation tasks. Their capabilities may include text summarization, sentiment analysis, and language translation.

- Anthropic Claude 2: The second major version of Claude, the language model developed by Anthropic. Claude 2 is likely designed to excel in tasks requiring deep contextual understanding and nuanced language generation.

- Mistral 7B: A 7-billion-parameter language model developed by Mistral AI. It is designed for a wide range of natural language processing tasks, including text generation, question answering, and document summarization.

- Google Palm: Google’s PaLM (Pathways Language Model), a large general-purpose language model for text understanding and generation.

- Google Palm-Chat: A variant of PaLM tailored for conversational applications such as chatbots and virtual assistants, with an emphasis on natural, fluid dialogue.

“Hallucination” is common among AI models: they generate fabricated facts or details to fill gaps in information or context. Because these fabrications can be woven seamlessly into otherwise accurate text, they can mislead unwary readers.

In the hallucination ranking, two of Google’s language models sit at the bottom, indicating that they hallucinate more often than the other models tested.

Notably, Google’s Palm-Chat model is the least reliable, with a hallucination rate above 27% on the test material. Vectara’s evaluation suggests that Palm-Chat responses are rife with hallucinated content, highlighting a significant hurdle in ensuring the reliability of AI-generated information.
