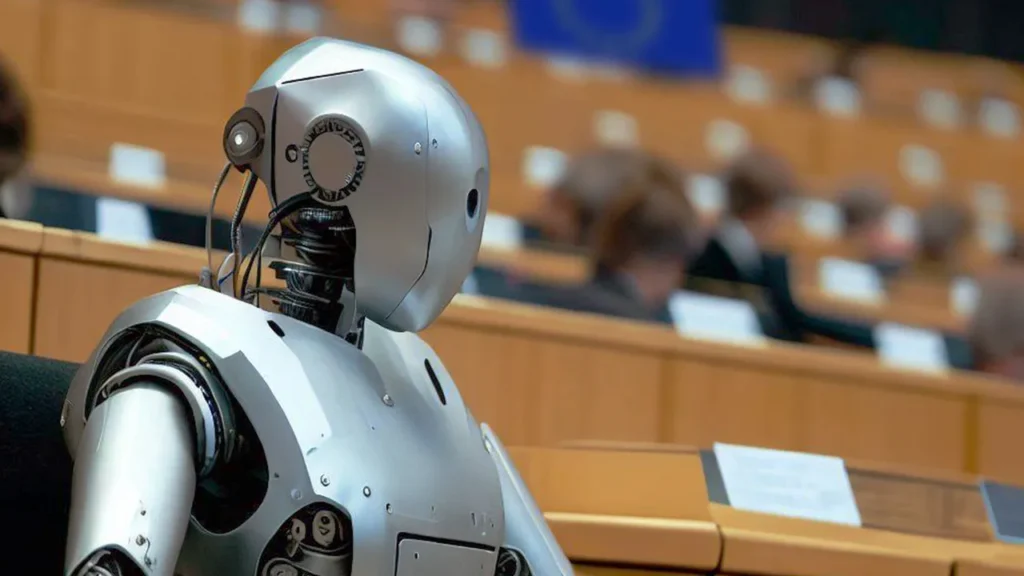

The artificial intelligence law, which was voted on last March and is expected to enter into force soon, has received final approval from the Council of the European Union. As part of this groundbreaking regulation, a new committee and office will be established.

The law will restrict the use of artificial intelligence in areas such as biometrics, facial recognition, education, and employment. Developers using AI will need to meet risk and quality management obligations to gain access to the EU market.

What prohibitions does the AI law cover?

The law, the first of its kind in the world, sorts AI systems into four risk categories: minimal, limited, high, and unacceptable. Most AI tools will initially face only limited transparency requirements, while tools classified in the higher risk categories will face stricter regulation.

For example, applications such as chatbots will be considered to pose limited risk and will be subject to lighter obligations. By contrast, practices such as emotion recognition, social scoring, and the extraction of sensitive data such as sexual orientation or religious beliefs will fall under the unacceptable risk category.

Following the Council of the European Union's approval, the law will be published in the Official Journal in the coming days and will come into force across the EU 20 days after publication. According to EU officials, the law represents an important milestone for the European Union.

The law will play a key role in determining the requirements that app developers must meet. Additionally, regulatory sandboxes will be established to support the development and real-world testing of innovative applications.
