Privacy is Dead? Meta Wants Its Smart Glasses to Scan Your Face

Do you remember that episode of Black Mirror where everyone could see everyone else’s social credit score and personal history just by looking at them? Or maybe you recall the futuristic vision of Minority Report, where ads scanned your retina as you walked by?

For years, we treated those scenes as dystopian science fiction. Today, I am looking at reporting on a leaked Meta document that suggests we are about to live in that reality.

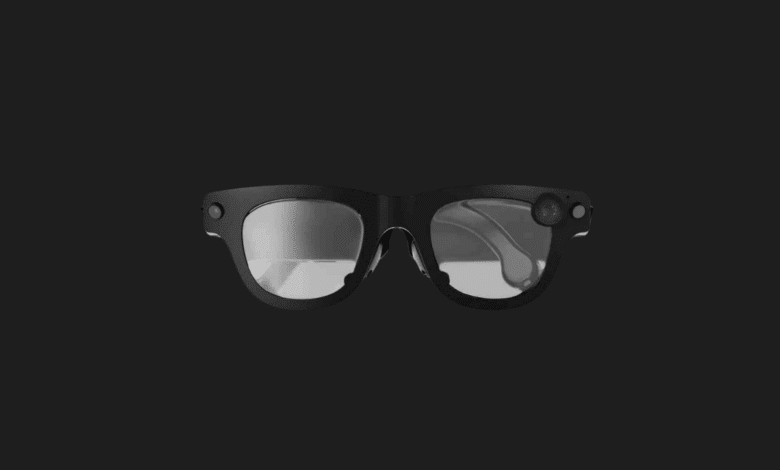

I have been a fan of the Ray-Ban Meta smart glasses. The hardware is sleek, the audio is great, and the ability to capture POV video is undeniably cool. But a recent report has uncovered a plan that makes the hair on the back of my neck stand up.

Meta is reportedly planning to bring facial recognition to these glasses. And the way they plan to do it, and when they plan to launch it, is deeply controversial.

The “Name Tag” Project: A Wolf in Sheep’s Clothing?

According to internal documents reviewed by The New York Times, Meta has been working on a feature internally called “Name Tag.”

Here is the pitch: You are wearing your smart glasses. You look at someone. The glasses use their cameras to scan the face, run it through Meta’s massive AI database, and whisper the person’s name or show you their profile.

Originally, Meta considered marketing this as a benevolent tool. The idea was to frame it as an accessibility feature for the visually impaired or those with face blindness (prosopagnosia). While that is a noble use case, let’s be real—that is also the perfect “Trojan Horse” to get the public to accept invasive surveillance technology.

The Cynical Launch Strategy

What really frustrated me while reading about this leak wasn’t just the technology itself, but the strategy behind the launch.

The document explicitly suggests that Meta should wait for a “busy political landscape” to release this feature.

- Translation: They want to drop this bombshell when the media, regulators, and privacy watchdogs are distracted by elections, wars, or scandals.

- They know this will cause a backlash. They are banking on the fact that we’ll be too busy arguing about politics to notice our privacy evaporating.

How It Works: Who Can Be Scanned?

You might be thinking, “Ugu, surely they can’t just scan random strangers on the street, right?”

Well, yes and no. The current plan, according to the leaks, isn’t a total “God Mode” (yet). The system is designed to identify:

- Your Existing Connections: People you are already friends with on Facebook or follow on Instagram.

- Public Profiles: This is the kicker. Even if you don’t know the person, if their profile is set to “Public” on Instagram or Facebook, the glasses could theoretically pull up their data.

Imagine walking into a bar. You scan the room. Your glasses tell you the names of three people you’ve never met, simply because their Instagram isn’t private. That is a fundamental shift in how human interaction works. Anonymity in public spaces is effectively dead.
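To make that rule concrete, here is a tiny Python sketch of the eligibility check as the leak describes it. To be clear, this is my own toy model, not Meta's code: `Profile`, `Wearer`, `can_identify`, and `scan_room` are all names I made up, and the real system would be matching faces, not comparing names.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    # Hypothetical stand-in for a Facebook or Instagram account.
    name: str
    is_public: bool = False


@dataclass
class Wearer:
    # Hypothetical stand-in for the person wearing the glasses.
    name: str
    connections: set[str] = field(default_factory=set)


def can_identify(wearer: Wearer, subject: Profile) -> bool:
    """Apply the two reported rules: existing connection OR public profile."""
    if subject.name in wearer.connections:
        return True
    return subject.is_public


def scan_room(wearer: Wearer, people: list[Profile]) -> list[str]:
    """Return the names the wearer would be shown, in the order scanned."""
    return [p.name for p in people if can_identify(wearer, p)]


# The bar scenario: one friend, one public stranger, one private stranger.
me = Wearer("me", connections={"Steve"})
bar = [Profile("Steve"), Profile("Ana", is_public=True), Profile("Lee")]
print(scan_room(me, bar))  # ['Steve', 'Ana']
```

Notice that the only person who stays anonymous in this sketch is the one with a private profile. Under the reported rules, the stranger's settings decide the outcome, not anything the wearer does.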

A History of Flip-Flopping

If you have been following Meta (formerly Facebook) as long as I have, you’ll remember that they have a complicated relationship with faces.

- 2017: Facebook aggressively pushed facial recognition to “tag” you in photos automatically.

- 2021: Following massive privacy outcry and lawsuits, they shut down the system and deleted over a billion face templates. Mark Zuckerberg basically said, “We hear you, privacy matters.”

Now, just a few years later, it seems they are bringing it back. Why? Because now they own the hardware. It’s one thing to tag a photo on a screen; it’s another thing entirely to have a camera on your face that is constantly analyzing the real world.

The “Super Sensing” Mode

This leak aligns perfectly with earlier reports from The Information, which detailed a “Super Sensing” mode for future Meta wearables.

This mode implies that the cameras are always active, constantly buffering and analyzing the environment to “help” you. If the AI is always watching to see if you need assistance, it is also always watching everyone around you.

The updated privacy policies are already hinting at this. Unless you explicitly turn off the “Hey Meta” voice activation, the microphone and camera systems remain in a semi-active state, waiting for a trigger.

My Perspective: The End of “Polite Ignorance”

There is a social contract we all sign when we go outside. If I see you on the subway, I don’t know who you are, and you don’t know who I am. That “polite ignorance” is what makes living in a crowded city bearable.

Meta’s “Name Tag” threatens to tear that contract up.

I love technology. I love the idea of an AI whispering, “That’s your old boss, Steve, don’t forget his name.” That is useful. But the line between “useful assistant” and “creepy stalker tool” is razor-thin.

If this feature launches, it forces every single one of us to make a choice:

- Lock down our profiles: Make everything private, hiding from the digital world to protect our physical privacy.

- Accept the Panopticon: Accept that if we are in public, we are being scanned, identified, and cataloged by anyone wearing a pair of Ray-Bans.

What Should You Do?

For now, this is still in the “internal planning” stage. But knowing Meta, “planning” usually means “coming soon.”

My advice? Start treating your public social media profiles as if they are tattooed on your forehead. Because if Meta gets its way, they soon will be.

I want to know where you stand. Is this a convenient feature you would use to remember names, or is this the final nail in the coffin for personal privacy?

Tell me in the comments below!