Starting today, Meta’s AI assistant will answer questions using real-time information. The feature is also coming to the Ray-Ban Meta smart glasses.

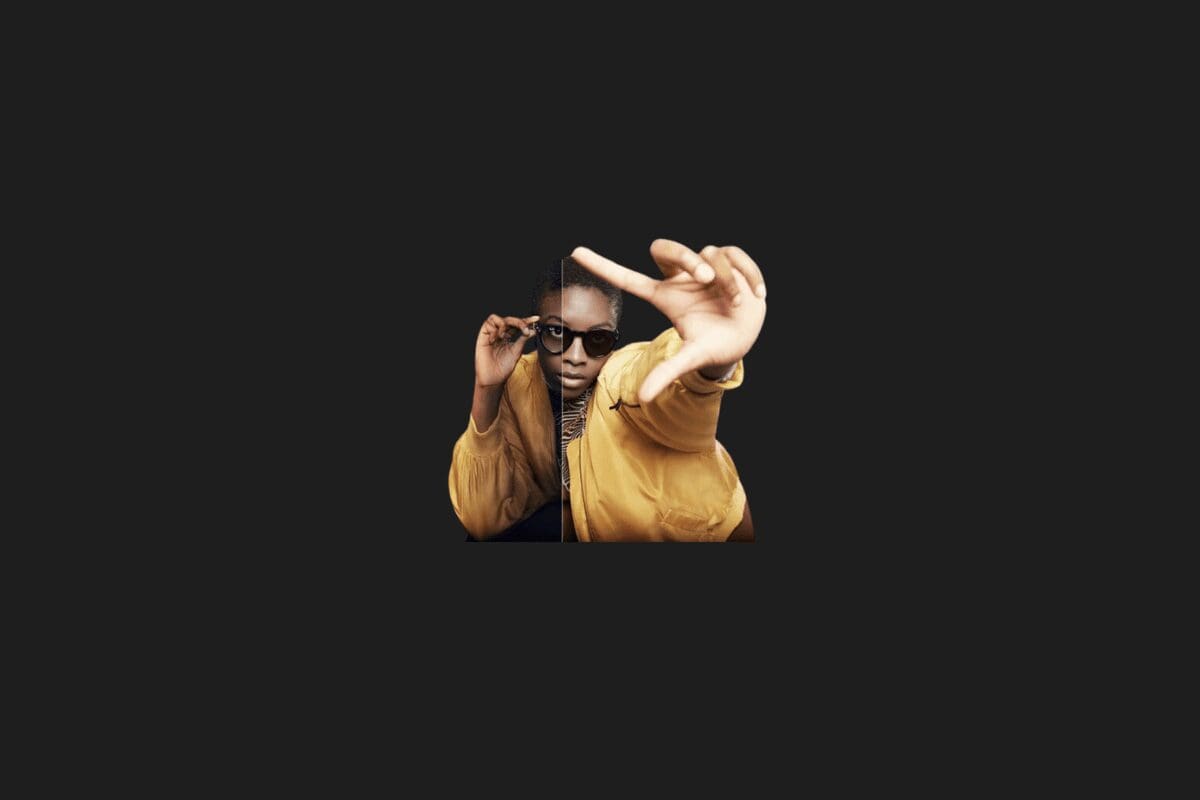

Ray-Ban and Meta jointly launched the smart glasses, and a forthcoming update adds a significant enhancement.

With this update, Meta’s AI will answer questions using real-time information. Meta also plans to begin testing “multimodal” capabilities that let the AI make recommendations based on the user’s surroundings.

Meta’s AI will now be able to answer questions about current events

Until now, Meta AI’s knowledge was cut off at December 2022, so it could not help users with real-time queries about current events, sports results, traffic conditions, or weather.

According to Meta CTO Andrew Bosworth, that is changing, starting with users of the Meta smart glasses in the United States. These users will get access to real-time information, powered in part by Bing.

Meta is also developing a multimodal AI system. Unveiled at the Connect event, the feature will let Meta’s AI answer questions about the user’s surroundings. For example, it will be able to identify an object such as a piece of fruit and provide information about it when asked, “What is this fruit?”

The update is not yet available to the general public, but those who want to try the new features can expect access in 2024. It remains to be seen how Meta will fare in a field where it has struggled in the past.