Google and Meta Declare War on Nvidia’s AI Software Dominance

Google is launching a new initiative called TorchTPU to strengthen PyTorch compatibility on TPU chips. The project, conducted in collaboration with Meta, directly challenges Nvidia’s software dominance.

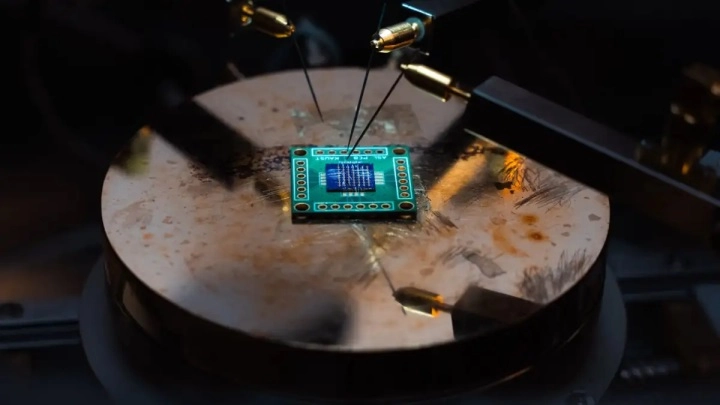

Alphabet’s Google is taking a strategic step aimed at weakening Nvidia’s long-standing software advantage in the artificial intelligence hardware market. According to sources close to the company, Google is working on a new initiative that will allow PyTorch, the world’s most widely used AI software framework, to run much more efficiently on its own AI chips, Tensor Processing Units (TPUs). Internally dubbed “TorchTPU,” the project is a direct challenge to the dominance Nvidia has established across both its hardware and its software ecosystem.

TorchTPU Coming as an Alternative

This move is seen as a key part of Google’s plan to make TPUs a real alternative to Nvidia’s market-leading GPUs. TPU sales have recently become a critical growth engine for Google Cloud revenue, and Google wants to show investors that its billions of dollars in AI investments are producing tangible returns. However, the company is aware that powerful hardware alone is not enough. The vast majority of AI developers have built their existing infrastructure on PyTorch, which makes software compatibility a deciding factor.

The primary goal of TorchTPU is to eliminate the biggest obstacles that PyTorch developers face when switching to TPUs. According to sources, Google aims to minimize the additional engineering cost for existing projects by making TPUs fully compatible with PyTorch and easier for developers to use. The company is also reportedly considering open-sourcing parts of the software to accelerate adoption. Compared with previous PyTorch support efforts, TorchTPU is reported to be receiving far more resources, organizational priority, and strategic importance.

Meta Was Behind PyTorch

PyTorch is an open-source project launched in 2016 that has become the de facto standard for AI developers, thanks in large part to heavy backing from Meta. Very few developers in Silicon Valley write code from scratch to run directly on Nvidia, AMD, or Google chips. Instead, they rely on tools like PyTorch that offer ready-made libraries and frameworks. The CUDA software ecosystem, seen as one of Nvidia’s biggest advantages, has been deeply integrated with PyTorch over the years and optimized to deliver maximum performance on Nvidia chips.

The picture is different on the Google side. For many years, the company has used a different machine learning framework, JAX, for its internal development, and TPUs rely on a compiler infrastructure called XLA to run code efficiently. Because most of Google’s AI software stack and performance optimizations are built around JAX, a serious gap has emerged between PyTorch, which customers prefer, and Google’s own approach. This gap stands out as one of the main factors slowing the widespread adoption of TPUs. If TorchTPU succeeds, switching costs are expected to drop significantly for companies looking for alternatives to Nvidia GPUs. Nvidia’s market dominance stems not only from powerful hardware but also from CUDA being deeply embedded in PyTorch, a combination that has long been the default for training and running large AI models.

Google is working closely with Meta, the creator and main supporter of PyTorch, to accelerate this process. The two companies are also reportedly discussing agreements that would give Meta access to more TPUs. The collaboration serves Meta’s interests as well: the company wants to lower its inference costs and diversify its infrastructure by reducing its dependence on Nvidia GPUs, which would in turn increase its bargaining power with Nvidia.