The Age of the Scalable Quantum Computer Is Now Very Close

IBM and Google aim to make quantum computers scalable within this decade. New technologies promise machines with millions of qubits that could outperform today’s supercomputers.

Since the 1980s, physicists and computer scientists have been trying to build quantum computers. Recent technological breakthroughs suggest that these efforts are close to paying off. Tech companies are now announcing plans to move quantum machines beyond the experimental stage and turn them into large-scale systems capable of solving problems beyond the reach of today’s supercomputers.

The Goal Is to Scale Within This Decade

Leading firms such as IBM and Google have updated their roadmaps for scalable quantum computers. IBM said its published roadmap addresses some of the most difficult technical challenges in the field and that the coming period could be decisive for the industry. Jay Gambetta, who leads IBM’s quantum initiatives, said in an interview: “It doesn’t feel like a dream anymore. I truly feel that we’ve cracked the code and can build this machine by the end of the decade.”

However, the path is still fraught with serious engineering challenges. Although researchers have solved the fundamental physics problems, manufacturers still face complex issues that will require a massive engineering effort. Oskar Painter, who leads quantum hardware development at Amazon Web Services, said that building a practical quantum computer could take 15 to 30 years.

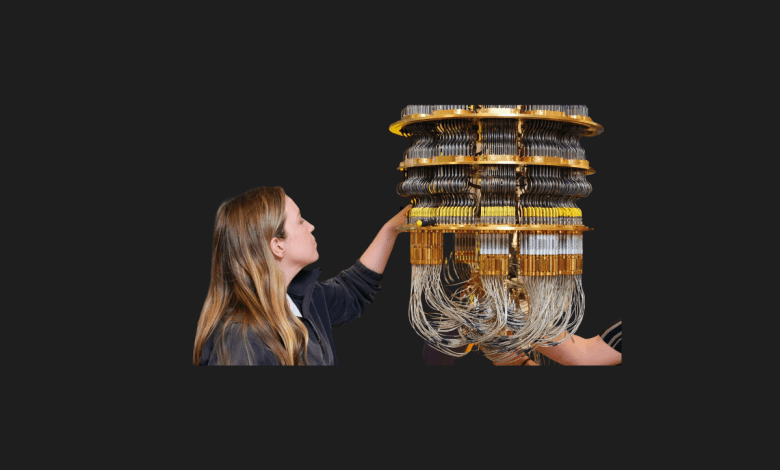

Today’s quantum prototypes typically have fewer than 200 qubits, but industrially useful machines will require millions. The difficulty is that qubits can hold their quantum states for only a tiny fraction of a second, and as more qubits are added, noise and unwanted interactions in the system increase, making reliable computation ever harder. IBM’s 1,121-qubit Condor chip highlighted this issue: its size increased unwanted interactions between components, degrading performance. IBM responded by redesigning the connections between its chips.
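Why adding qubits makes uncorrected computation harder can be shown with a minimal sketch under a deliberately simple assumption: every qubit independently suffers an error with the same probability in each layer of a circuit. The function name, the error rate, and the circuit sizes below are hypothetical, and the model ignores crosstalk, the effect that grew with Condor’s size, so it captures only part of the problem.

```python
def error_free_probability(num_qubits: int, layers: int, p: float) -> float:
    """Chance that an uncorrected circuit finishes with no error anywhere.

    Toy model (an assumption): each qubit independently suffers an error with
    probability p in every circuit layer. Crosstalk between components, which
    grows with chip size, is not modelled.
    """
    return (1 - p) ** (num_qubits * layers)


if __name__ == "__main__":
    p = 1e-3  # hypothetical per-qubit, per-layer error rate
    for n in (10, 100, 1_000):
        prob = error_free_probability(n, 100, p)
        print(f"{n:>5} qubits x 100 layers: {prob:.3g}")
```

Because the exponent is the product of qubits and layers, the chance of an error-free run collapses quickly as either grows, which is why large machines are expected to rely on error correction.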

Google, for its part, aims to cut component costs by a factor of ten, bringing the cost of a full-scale quantum computer to around $1 billion. The key to scalability is quantum error correction. These schemes tolerate the errors caused by imperfect qubits by spreading each piece of data redundantly across multiple qubits, so that individual errors can be detected and corrected instead of corrupting the result. Julian Kelly, the hardware lead at Google Quantum AI, notes that scaling systems too early can lead to wasted resources, noisy outputs, and high engineering costs.
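The idea of spreading data across several carriers can be illustrated with its simplest classical analogue: a repetition code decoded by majority vote. This is a sketch under stated assumptions, not how quantum codes actually work, since qubits cannot simply be copied and real codes must also catch phase errors by measuring parity checks instead of reading the data directly. The function name and the 1% error rate are hypothetical.

```python
from math import comb


def logical_error_rate(p: float, n: int) -> float:
    """Probability that a majority vote over n copies of a bit is wrong.

    Toy classical model (an assumption, not a quantum noise model): each copy
    flips independently with probability p. n must be odd so votes never tie.
    """
    if n % 2 == 0:
        raise ValueError("n must be odd to avoid tied votes")
    # The vote fails only when more than half of the n copies are flipped.
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    p = 0.01  # hypothetical physical error rate of 1% per copy
    for n in (1, 3, 5, 7):
        print(f"{n} copies: logical error rate {logical_error_rate(p, n):.2e}")
```

With p = 0.01, three copies cut the failure rate from 1% to about 0.03%, and each additional pair of copies cuts it further. If p is instead above 0.5, the threshold for this toy model, extra copies make the result worse. Quantum codes have an analogous but much lower threshold, which is one reason adding qubits only pays off once the physical qubits are good enough.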

Google has developed a quantum chip whose error correction improves as the system is scaled up. The chip uses a technique called the “surface code,” in which each qubit is linked to its nearest neighbors on a two-dimensional grid. Performing meaningful calculations with this structure would require millions of qubits. IBM, by contrast, is working on low-density parity-check (LDPC) codes, which could cut the number of qubits needed by about 90% but rely on complex long-range connections between distant qubits.
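The qubit counts mentioned above can be roughly checked with a back-of-the-envelope calculation. It rests on assumptions not stated in the article: a rotated surface code of distance d uses d² data qubits plus d² − 1 measurement qubits per logical qubit, and IBM’s published “gross” LDPC code ([[144, 12, 12]]) stores 12 logical qubits at distance 12 in 288 physical qubits (144 data plus 144 check qubits). The function names and the 1,000-logical-qubit, distance-25 machine are hypothetical.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits per logical qubit in a rotated surface code.

    Assumes the common rotated layout: distance**2 data qubits on a 2D grid
    plus distance**2 - 1 measurement qubits that check their neighbors.
    """
    return 2 * distance**2 - 1


def ldpc_saving(logical: int, distance: int, ldpc_physical: int) -> float:
    """Fraction of physical qubits saved by an LDPC code that stores `logical`
    qubits in `ldpc_physical` qubits, versus one surface-code patch of the
    same distance per logical qubit."""
    surface_total = logical * surface_code_qubits(distance)
    return 1 - ldpc_physical / surface_total


if __name__ == "__main__":
    # Hypothetical machine: 1,000 logical qubits at surface-code distance 25.
    print("Surface code, 1,000 logical qubits:", 1_000 * surface_code_qubits(25))
    # Assumed gross-code parameters: 12 logical qubits, distance 12, 288 physical.
    print(f"Gross-code saving vs surface code: {ldpc_saving(12, 12, 288):.0%}")
```

Under these assumptions, the hypothetical machine needs about 1.25 million physical qubits, and the gross code uses roughly 92% fewer qubits than twelve distance-12 surface-code patches, in line with the figure of about 90%. The comparison ignores the extra cost of the long-range wiring that LDPC codes require.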

The most notable advances are coming from superconducting circuit qubits, which are used in IBM’s and Google’s machines. These operate at temperatures near absolute zero and are difficult to control. Other approaches use trapped ions, neutral atoms, or photons. Although these qubits are more stable, scaling them up and integrating large numbers of them remains a major engineering challenge.

Beyond IBM and Google, companies such as Amazon and Microsoft are researching exotic states of matter to build more reliable qubits. These next-generation technologies are still at an early stage, but some suggest they could eventually surpass today’s quantum machines.

The growing interest in quantum computing is also attracting investors and government agencies. Last year, DARPA, the Pentagon’s advanced research agency, launched a review to identify quantum technologies that could reach industrial use quickly, giving leading companies a way to test which approaches can deliver practical, scalable systems.