Helix 02: Figure AI Just Changed the Rules of Robotics

I watch a lot of robot videos. It’s part of the job. Usually, I see two things: either a robot doing parkour (impressive but not practical for my kitchen) or a robot folding a shirt so slowly that I could finish the laundry for the whole neighborhood before it’s done.

But yesterday, Figure AI dropped something different. They introduced Helix 02, and for the first time in a long while, I felt the ground shift under my feet.

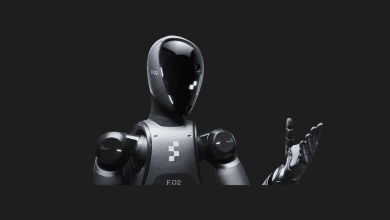

This isn’t just another hardware upgrade. This is a fundamental rewrite of how robots think. We aren’t looking at a machine following a script anymore; we are looking at a machine that is learning to move, see, and act like us.

Here is my deep dive into Helix 02 and why I believe the era of “hard-coded” robots is officially over.

The “System 0” Revolution

The headline here isn’t the metal skin or the battery life. It’s the brain. Figure AI calls it “System 0.”

To understand why this is a big deal, you have to understand how robots usually work. Traditionally, engineers write thousands of lines of code for every specific action. “If camera sees handle, move arm X degrees, close gripper Y force.” It’s rigid. It’s brittle.

Helix 02 throws that out the window.

- The Neural Network: The robot uses a single, massive neural network that controls its entire body.

- End-to-End Control: It takes in visual data (what it sees) and directly outputs motor actions (movement). There is no “translation” layer in the middle.

- The Code Purge: Figure AI claims this approach let them replace over 109,000 lines of hand-written C++ with a single learned system.
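
To make the contrast concrete, here is a toy Python sketch. Everything in it is hypothetical: the function names, the four-pixel "image", the layer sizes, and the random, untrained weights are my own illustrative assumptions, not Figure AI's architecture. The first function is the brittle "if camera sees handle" style of rule; the class below it maps pixels straight to motor commands through one small network, with no hand-written rule in between.

```python
import math
import random

def scripted_controller(handle_visible: bool) -> dict:
    """Hard-coded style: one explicit rule per situation (values are made up)."""
    if handle_visible:
        return {"arm_deg": 30.0, "grip_force": 5.0}
    # Anything the engineer did not anticipate falls through to "do nothing".
    return {"arm_deg": 0.0, "grip_force": 0.0}

class TinyPolicy:
    """End-to-end style: pixels in, motor commands out (untrained toy network)."""

    def __init__(self, n_pixels: int, n_hidden: int, n_motors: int, seed: int = 0):
        rng = random.Random(seed)
        # Random weights stand in for what training would normally learn.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_motors)]

    def act(self, pixels: list[float]) -> list[float]:
        hidden = [math.tanh(sum(w * p for w, p in zip(row, pixels))) for row in self.w1]
        # tanh keeps each motor command in the range [-1, 1].
        return [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in self.w2]

policy = TinyPolicy(n_pixels=4, n_hidden=8, n_motors=3)
motor_commands = policy.act([0.1, 0.9, 0.4, 0.0])
print(motor_commands)
```

The scripted version only knows the cases someone wrote down. The policy version produces an output for any input, and what matters is how well its weights were trained, which is exactly where the engineering effort moves.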

I find this fascinating because it mirrors human biology. When you reach for a coffee cup, you don’t calculate the trajectory in C++; your brain just maps the visual goal to a muscle movement. Helix 02 is finally doing the same.

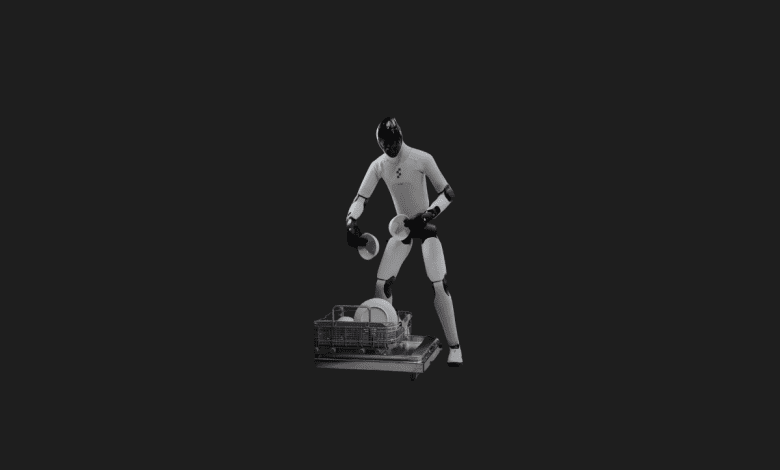

The Ultimate Test: The Dishwasher

Figure AI showed off Helix 02’s capabilities with a task that sounds mundane but is actually a robotics nightmare: The Dishwasher.

In a continuous, uncut demonstration lasting about four minutes, Helix 02 navigated a full-sized kitchen, opened a dishwasher, unloaded it, and reloaded it.

Why is this impressive?

- Reflections & Transparencies: Dishwashers are full of shiny metal, glass, and wet surfaces. These wreak havoc on traditional LiDAR and depth sensors. In the demo, Helix 02 handled that visual noise using vision-only networks.

- No Interventions: The company claims this was fully autonomous. No teleoperation (human remote control), no cuts.

- Complexity: It wasn’t just moving an object from A to B. It was interacting with a hinged door, sliding racks, and fragile objects.

I’ve seen robots stack boxes in structured warehouses, but a kitchen is a chaotic, unstructured environment. To see a robot handle that chaos for four minutes straight without freezing up is a massive leap forward.

Solving the “Loco-Manipulation” Puzzle

There is a term in robotics called “Loco-manipulation.” It refers to the ability to walk (locomotion) and use your hands (manipulation) at the same time.

Try walking while threading a needle or carrying a brimming cup of coffee. Your body naturally stabilizes your arms while your legs move. For robots, this is incredibly hard. Usually, they stop, stabilize, do the task, and then move again.

Helix 02 breaks this barrier. Because “System 0” controls the whole body as one unit, the walking and the hand movements are synchronized.

- Proprioception: This is the body’s ability to sense where its own limbs are in space. Helix 02 fuses data from vision and its internal sensors to move fluidly.

- Efficiency: By moving and working simultaneously, it skips the stop-and-stabilize pauses and cuts the time it takes to complete tasks.
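
A bit of back-of-the-envelope arithmetic shows why overlapping the two matters. The durations below are numbers I made up for illustration, not measurements from Figure AI, and the "concurrent" case assumes perfect overlap, which real robots will not quite reach.

```python
# Hypothetical durations in seconds for one "carry a cup to the counter" step.
WALK_S = 6.0
STABILIZE_S = 1.5   # pause to settle before using the hands
MANIPULATE_S = 4.0

def stop_and_go(walk: float, stabilize: float, manipulate: float) -> float:
    """Walk, stop, stabilize, then manipulate: the phases add up."""
    return walk + stabilize + manipulate

def concurrent(walk: float, manipulate: float) -> float:
    """Idealized overlap: hands work while legs move, so the longer phase dominates."""
    return max(walk, manipulate)

sequential_s = stop_and_go(WALK_S, STABILIZE_S, MANIPULATE_S)
overlapped_s = concurrent(WALK_S, MANIPULATE_S)
saved_fraction = 1 - overlapped_s / sequential_s
print(f"stop-and-go: {sequential_s:.1f}s, concurrent: {overlapped_s:.1f}s, saved: {saved_fraction:.0%}")
```

With these made-up numbers the overlapped version takes roughly half the time. The exact saving depends entirely on the task, but the shape of the math is the point: adding phases versus taking the longer one.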

Surgeon-Level Precision

It’s not just about heavy lifting. The sensory upgrade on this machine is startling.

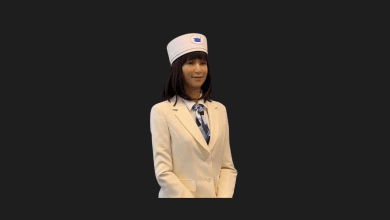

Helix 02 is equipped with palm cameras and advanced tactile sensors (likely part of the Figure 03 hardware platform). This allows for what I call “micro-manipulation.”

In the demos, we saw it:

- Sorting Pills: Separating individual pills requires immense dexterity.

- Handling Syringes: This implies a level of pressure sensitivity that could eventually be trusted in healthcare settings.

- Picking Chaos: Selecting small, irregular metal parts from a disorganized bin (“bin picking” is a classic robot test).

This tells me that Figure AI isn’t just targeting the warehouse floor. They are looking at hospitals, laboratories, and eventually, our homes.

How Did They Teach It?

This is the part that scares some people and excites others (including me). They didn’t “program” Helix 02 in the traditional sense. They “raised” it.

The training set is 1,000+ hours of human motion data. Humans performed these tasks wearing motion-capture gear, and the AI learned the relationship between what the human saw and how they moved.

They combined this with Reinforcement Learning in simulation. Basically, the robot practices in a virtual world millions of times, getting “rewarded” for success and “punished” for dropping the virtual plate, before the software is ever uploaded to the physical robot.
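
Here is a toy version of that reward loop in Python. To be clear about what is assumed: the "simulator", the grip-force threshold, and the reward values are all hypothetical, and I am using plain random search as a deliberately simple stand-in for a real reinforcement learning algorithm. It is a sketch of the idea, not Figure AI's training code.

```python
import random

def simulate_episode(grip_force: float, rng: random.Random) -> float:
    """Toy simulator: +1.0 if the virtual plate is carried, -1.0 if it is dropped."""
    required = 3.0 + rng.uniform(-0.5, 0.5)  # hypothetical force needed, with noise
    return 1.0 if grip_force >= required else -1.0

def average_reward(grip_force: float, rng: random.Random, episodes: int = 20) -> float:
    """Run several virtual attempts and average the reward."""
    return sum(simulate_episode(grip_force, rng) for _ in range(episodes)) / episodes

def train(trials: int = 50, seed: int = 0) -> tuple[float, float]:
    """Random search: try grip forces, keep whichever earns the most reward."""
    rng = random.Random(seed)
    best_grip = 1.0  # deliberately too weak, so the first attempts get punished
    best_score = average_reward(best_grip, rng)
    for _ in range(trials):
        candidate = rng.uniform(0.0, 10.0)
        score = average_reward(candidate, rng)
        if score > best_score:  # only keep a candidate that earned more reward
            best_grip, best_score = candidate, score
    return best_grip, best_score

grip, score = train()
print(f"learned grip force: {grip:.2f}, average reward: {score:+.2f}")
```

Nobody tells the program what the right grip force is. It only sees the reward and keeps what worked. A real setup would also punish crushing the plate and would tune millions of network weights instead of one number, but the feedback loop has the same shape.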

My Final Take

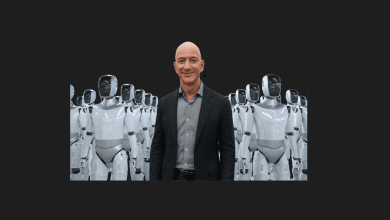

We are witnessing the “ChatGPT moment” for physical robotics. Just as Large Language Models (LLMs) moved us away from rigid chatbots to fluid conversation, Large Action Models like System 0 are moving us from rigid automation to fluid physical intelligence.

Helix 02 proves that we don’t need more code; we need better neural networks.

I have to ask you: Letting a robot handle a syringe or sort pills requires a massive amount of trust. Would you be comfortable letting Helix 02 organize your medicine cabinet, or is that too much trust in an AI “black box”? Let’s discuss it in the comments.