Microsoft Azure Maia 200: A Game Changer for AI Infrastructure

I’ve been tracking the “chip wars” for a while now, and honestly, Microsoft just threw a massive wrench into the status quo. While everyone has been staring at NVIDIA, Microsoft quietly went into the lab and cooked up the Azure Maia 200. After digging into the specs, I realized this isn’t just a minor upgrade; it’s a clear statement that Microsoft wants to own the entire AI stack, from the software you use to the silicon that powers it.

I find it fascinating that we are moving away from “one-size-fits-all” hardware. The Maia 200 is built specifically for inference—the part where the AI actually “thinks” and answers your prompts—and that is where the real battle for efficiency is won.

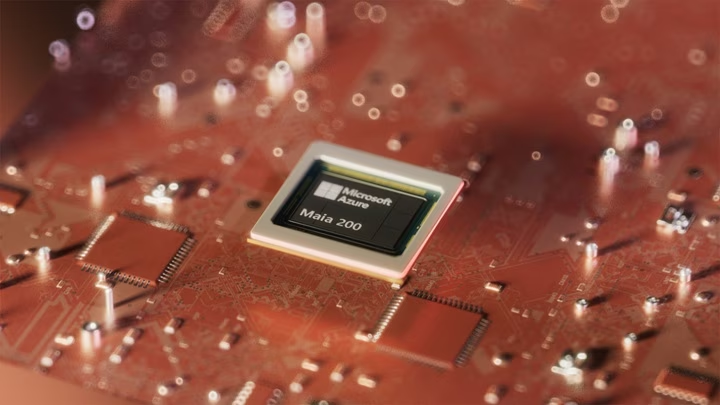

What Makes Maia 200 a Beast?

When I looked at the technical sheet, the first thing that jumped out at me was the memory: 216 GB of HBM3e. To put that into perspective, a 200-billion-parameter model stored at 4 bits per weight takes up roughly 100 GB, so that’s a massive amount of high-speed “brain space” for an AI to juggle complex data without breaking a sweat.

Here’s the breakdown of why this chip is making the industry nervous:

- Massive Transistor Count: Built on TSMC’s 3nm process, it packs roughly 140 billion transistors. The density here is mind-blowing.

- Performance Leaps: It delivers up to 10 petaflops of FP4 compute. When I compared this to Amazon’s Trainium3, that came out to nearly three times the FP4 performance.

- Efficiency is King: Microsoft claims it delivers 30% better performance per dollar than the latest hardware already in its fleet. At the scale of massive data centers, a gap like that can add up to enormous savings.

- Insane Bandwidth: With a memory bandwidth of 7 TB/s, data moves through this chip like a Formula 1 car on an open track.
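
To make those numbers a bit more concrete, here’s a quick back-of-envelope sketch I put together. It only uses the figures quoted above (216 GB, 7 TB/s, 30%), and everything else is my own assumption: decimal units, a hypothetical 100 GB model, and a deliberately naive rule that generating one token means reading every weight once. Treat it as rough arithmetic, not a benchmark.

```python
# Back-of-envelope arithmetic from the figures quoted in this post.
# ASSUMPTIONS (mine, not Microsoft's): decimal units (1 GB = 1e9 bytes),
# weights are the only thing in memory, and decoding one token reads
# every weight exactly once (no KV cache, batching, or compute limits).

HBM_CAPACITY_GB = 216         # HBM3e capacity quoted above
HBM_BANDWIDTH_TBPS = 7        # memory bandwidth quoted above
PERF_PER_DOLLAR_GAIN = 0.30   # Microsoft's claimed improvement

BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def max_params_billions(capacity_gb: float, bytes_per_param: float) -> float:
    """Largest model, in billions of parameters, whose weights fit."""
    return capacity_gb / bytes_per_param


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Milliseconds needed to read the whole memory once."""
    seconds = capacity_gb / (bandwidth_tbps * 1000)
    return seconds * 1000


def decode_tokens_per_sec_ceiling(model_gb: float, bandwidth_tbps: float) -> float:
    """Naive upper bound on single-stream tokens per second."""
    return bandwidth_tbps * 1000 / model_gb


def relative_cost_for_same_work(gain: float) -> float:
    """Cost of the same workload relative to the baseline hardware."""
    return 1 / (1 + gain)


if __name__ == "__main__":
    for fmt, size in BYTES_PER_PARAM.items():
        fit = max_params_billions(HBM_CAPACITY_GB, size)
        print(f"{fmt}: up to ~{fit:.0f}B parameters fit in {HBM_CAPACITY_GB} GB")

    sweep = full_memory_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
    print(f"Reading all of memory once takes ~{sweep:.1f} ms")

    # Hypothetical 100 GB model, purely for illustration.
    ceiling = decode_tokens_per_sec_ceiling(100, HBM_BANDWIDTH_TBPS)
    print(f"100 GB model: at most ~{ceiling:.0f} tokens/s per stream")

    cost = relative_cost_for_same_work(PERF_PER_DOLLAR_GAIN)
    print(f"Same work costs ~{cost:.0%} of the baseline")
```

The takeaways: at FP4 the weights of a model with up to roughly 432 billion parameters would fit on one chip (versus about 108 billion at FP16), a full pass over memory takes about 31 ms, and 30% better performance per dollar means the same work costs about 77% of what it did before, or roughly 23% less.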

Why This Actually Matters to You

You might think, “Ugh, this is just server stuff, why should I care?” But here’s the thing: this is the chip that will actually run GPT-5.2 and the next versions of Microsoft 365 Copilot.

When I’m using AI, I want it to be instant. I don’t want to wait for a loading bar. By building their own chips, Microsoft can optimize exactly how their models run. It’s the “Apple approach”—designing the hardware and software together to get a seamless experience.

It’s already live in Azure’s US Central data center region, so if you’ve noticed your Copilot responses getting snappier lately, you might have the Maia 200 to thank.

My Take: The End of the NVIDIA Dependency?

I don’t think Microsoft is going to stop buying NVIDIA chips tomorrow, but they are definitely building a “Plan B.” By creating a heterogeneous environment where Maia 200 handles specific inference tasks, they reduce their reliance on supply chains they don’t control.

What I find most impressive is the optimization for FP4 and FP8, the low-precision number formats that modern inference leans on. It shows they aren’t trying to build a chip that does everything; they are building a chip that does AI inference better than anything else. It’s surgical, it’s efficient, and it’s very smart.
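
If you’re wondering what “FP4” actually does to a number, here’s a minimal sketch. I’m assuming the common E2M1 layout (eight magnitudes from 0 to 6, plus a sign bit) and a simple shared scale per block of values. I don’t know which FP4 variant or scaling scheme the Maia 200 really uses, so the function names and the scheme are purely illustrative.

```python
# Illustrative FP4 rounding with one shared scale per block of values.
# ASSUMPTION: E2M1 value grid and max-abs scaling. This post's sources do
# not say which FP4 variant or scaling scheme Maia 200 implements.

# The eight non-negative magnitudes representable in E2M1.
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block_fp4(values):
    """Return (scale, codes), where each code is a signed E2M1 grid value."""
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0.0:
        return 1.0, [0.0] * len(values)

    # Map the largest magnitude in the block onto the top grid value (6.0).
    scale = max_abs / E2M1_MAGNITUDES[-1]

    codes = []
    for v in values:
        target = abs(v) / scale
        # Pick the nearest grid point; ties go to the smaller magnitude.
        nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate real values from the scale and the codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.6, -3.2, 6.0, 1.4]
    scale, codes = quantize_block_fp4(weights)
    print("scale:", scale)
    print("codes:", codes)
    print("restored:", dequantize_block(scale, codes))
```

Each weight ends up as one of only 16 possible codes, which is why it fits in 4 bits instead of 16. The price is rounding error (1.4 comes back as 1.5 here), and that trade is exactly what inference-focused hardware is betting on.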

I’m curious to see how Google and AWS respond to this, because the bar for “custom silicon” just got pushed significantly higher.

If you could choose between a faster AI that costs more or a slightly slower AI that is significantly cheaper to run, which one would you prefer for your daily tasks?