Giving Robots the Sense of Touch: Ensuring Technology’s Breakthrough

I’ve spent a lot of time this week thinking about what actually separates us from the machines we build. We’ve given them eyes through high-res cameras, ears through sensitive microphones, and even a “brain” through LLMs. But most robots have remained essentially numb. They could pick up a glass of water, but they couldn’t feel if it was slipping or how cold the condensation was.

That changed for me at CES 2026. While everyone was crowded around the flashy humanoid demos, I spent some time with a company called Ensuring Technology. They’ve unveiled something that I believe is the “missing link” in robotics: a synthetic skin that actually mimics human touch.

Why “Feeling” is the Final Frontier

Think about it—as humans, we don’t just “see” the world; we feel our way through it. When you grab a ripe peach, your brain receives instant feedback about its softness and texture so you don’t crush it. Robots, despite their advanced AI, have historically struggled with this. They rely on visual data and torque sensors, which is like trying to perform surgery while wearing thick oven mitts.

I’ve always felt that for a robot to truly integrate into our homes—to help with the dishes or care for the elderly—it needs to understand pressure, texture, and contact. Ensuring Technology’s new “Electronic Skin” (e-skin) aims to solve exactly that.

Tacta: The Fingertip Revolution

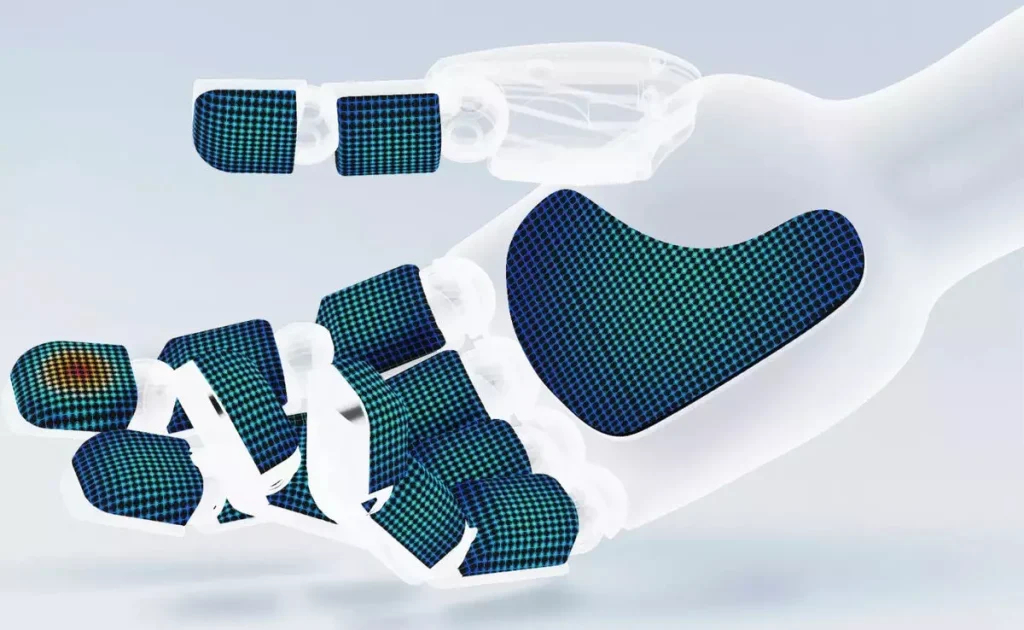

The first product they showed me was Tacta. This is a multi-dimensional tactile sensor specifically designed for robotic hands and fingertips.

I dug into the specs, and honestly, the precision is staggering. Here is what makes Tacta different from anything I’ve seen before:

- Sensing Density: It features 361 sensing elements per square centimeter. To put that in perspective, that spatial resolution is roughly on par with a human fingertip.

- High-Speed Sampling: The data is sampled at 1000 Hz, which works out to one fresh reading every millisecond. This means the robot isn’t just “feeling” once; it’s getting a continuous stream of data, allowing it to notice a slip within a few milliseconds of it starting.

- Edge Computing: Despite being only 4.5 mm thick, the sensor includes the sensing layer, data processing, and edge computing in a single module. No bulky external processors needed.
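To get a feel for what those numbers imply, here is a rough Python sketch. The density and sampling rate are the quoted specs; everything else is my own assumption for illustration: that the elements sit in a square grid, that the sensor exposes a per-sample shear reading, and the `detect_slip` rule itself (with its `threshold` and `consecutive` parameters), which is a naive stand-in and not Ensuring Technology’s actual algorithm.

```python
import math

# Quoted Tacta specs from the demo.
ELEMENTS_PER_CM2 = 361   # sensing elements per square centimeter
SAMPLE_RATE_HZ = 1000    # samples per second

# Derived numbers.
sample_period_ms = 1000 / SAMPLE_RATE_HZ                  # time between readings
readings_per_second = ELEMENTS_PER_CM2 * SAMPLE_RATE_HZ   # per cm^2 of skin

# Assumption: elements are laid out in a square grid (361 = 19 * 19).
grid_side = math.isqrt(ELEMENTS_PER_CM2)
pitch_mm = 10 / grid_side                                 # spacing between elements


def detect_slip(shear_samples, threshold=0.2, consecutive=3):
    """Return the index of the sample where slip is flagged, or None.

    Hypothetical rule: flag a slip once the sample-to-sample change in
    shear exceeds `threshold` for `consecutive` samples in a row.
    """
    run = 0
    for i in range(1, len(shear_samples)):
        if abs(shear_samples[i] - shear_samples[i - 1]) > threshold:
            run += 1
            if run >= consecutive:
                return i
        else:
            run = 0  # the change settled down, so start counting again
    return None


if __name__ == "__main__":
    print(f"One reading every {sample_period_ms} ms")
    print(f"{readings_per_second} element readings per second per cm^2")
    print(f"About {pitch_mm:.2f} mm between elements (square-grid assumption)")
```

The takeaway: any rule that waits for a few consecutive samples before reacting needs at least that many milliseconds at 1000 Hz, so the realistic reaction window is a handful of milliseconds, not a fraction of one.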

Watching a robotic hand equipped with Tacta pick up a grape without bruising it, and then immediately switch to holding a heavy metal tool, was a “wow” moment for me. It’s not just about strength anymore; it’s about finesse.

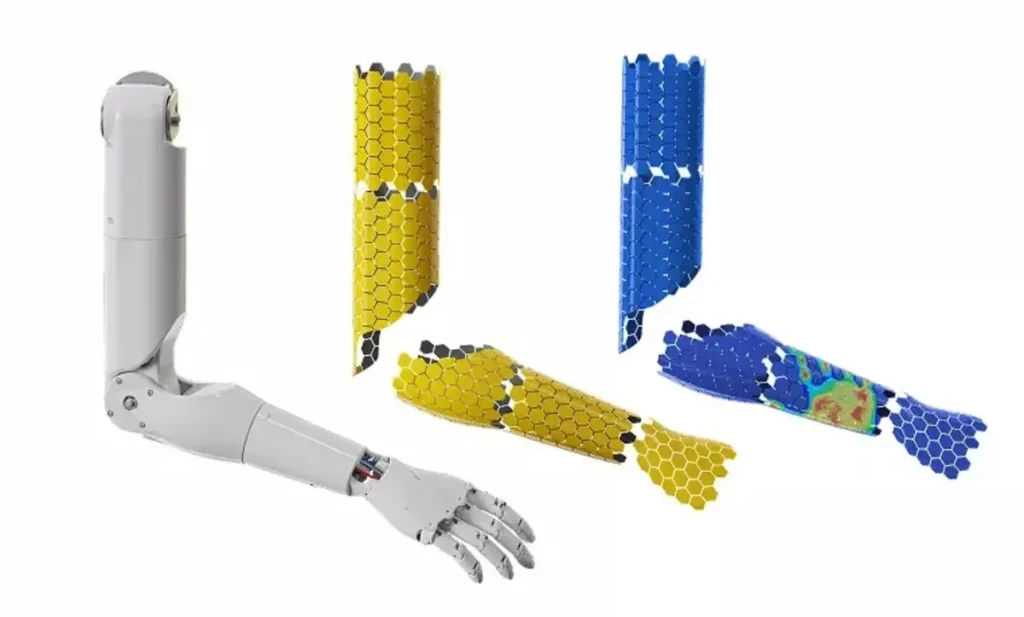

HexSkin: Wrapping the Humanoid Form

While Tacta handles the fine motor skills, HexSkin is designed for the rest of the body. I’ve always wondered how we’d make robots safe to be around. If a 150-kg metal humanoid bumps into you, it needs to know immediately that it has made contact.

HexSkin uses a brilliant hexagonal, tile-like design. This modular approach allows the skin to be wrapped around complex, curved surfaces—like a robot’s forearm, chest, or legs.

- Scalability: Because of the hexagonal grid, it can cover large areas without losing sensitivity.

- Collision Awareness: Full-body coverage gives the robot “whole-body” awareness. If someone taps a robot on the shoulder, it doesn’t need to see them to know they are there.
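I don’t know how HexSkin addresses its tiles internally, but a hexagonal layout lends itself to a simple way of working out where contact happened. The sketch below is my own illustration in Python, assuming each tile is labeled with axial `(q, r)` coordinates and reports a single pressure value; the function names and the threshold are hypothetical.

```python
# Axial (q, r) coordinates: every hexagonal tile has exactly six neighbors.
NEIGHBOR_OFFSETS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


def neighbors(tile):
    """Return the six tiles adjacent to `tile`."""
    q, r = tile
    return [(q + dq, r + dr) for dq, dr in NEIGHBOR_OFFSETS]


def hex_distance(a, b):
    """Number of tile-to-tile steps between two tiles."""
    dq = a[0] - b[0]
    dr = a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2


def contact_regions(pressures, threshold):
    """Group touched tiles into connected contact patches.

    `pressures` maps (q, r) -> pressure reading (hypothetical units).
    Tiles above `threshold` that touch each other form one patch.
    """
    active = {tile for tile, p in pressures.items() if p > threshold}
    regions = []
    while active:
        start = active.pop()
        region = {start}
        stack = [start]
        while stack:  # flood fill across neighboring active tiles
            current = stack.pop()
            for n in neighbors(current):
                if n in active:
                    active.remove(n)
                    region.add(n)
                    stack.append(n)
        regions.append(region)
    return regions


if __name__ == "__main__":
    readings = {(0, 0): 1.0, (1, 0): 0.8, (5, 5): 0.9, (2, 2): 0.0}
    for patch in contact_regions(readings, threshold=0.5):
        print(sorted(patch))
```

Grouping neighboring tiles like this is what would let a robot tell one broad bump from two separate taps, and adding more tiles extends the map without changing the logic.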

Why This Matters for the Metaverse and Beyond

You might be wondering why a “Metaverse” brand is covering physical robotic skin. For me, the answer is simple: the line between the digital and physical is blurring. We are moving toward a world of Telepresence.

Imagine wearing a haptic suit in the Metaverse while controlling a robot in the real world. If that robot has Tacta skin, you could theoretically “feel” the texture of a fabric from thousands of miles away, and, if the sensors also capture temperature, the heat of a cup of coffee. This is the hardware that will eventually bridge that gap.

I also think about the safety aspect. I’ve been a bit skeptical about “home robots” because of the physical risk. But a robot that can feel a child’s hand or a pet’s tail in its path is a robot I’d actually trust in my living room.

My Take: The Uncanny Valley of Touch

There’s something slightly eerie about a robot having “human-like” touch. We’ve spent so long looking at robots as cold, hard machines. Giving them skin—even if it’s synthetic—makes them feel much more “alive.”

As I watched the Ensuring Technology demo, I realized we are rapidly closing the gap. With Nvidia’s Rubin architecture providing the processing power and Ensuring’s e-skin providing the sensory input, the “Terminator” or “I, Robot” future is looking less like fiction and more like a scheduled product release.

Wrapping Up

The breakthrough from Ensuring Technology suggests that the next stage of AI isn’t just about better algorithms; it’s about better embodiment. We are moving from AI that thinks to AI that feels.

I’m curious what you think about this. If we give robots the ability to feel pain or soft textures, does that change how you view them? Would you feel more comfortable having a robot in your home if you knew it had a “human-like” sense of touch, or does that make them a little too realistic for your liking?

Let me know your thoughts—I’m genuinely curious if this crosses a line for you or if it’s the upgrade you’ve been waiting for.