AWS has introduced its Trainium3 chip, which offers a 4x increase in performance and memory over its predecessor. The upcoming Trainium4 will be compatible with Nvidia GPUs and is intended for use in large AI clusters.

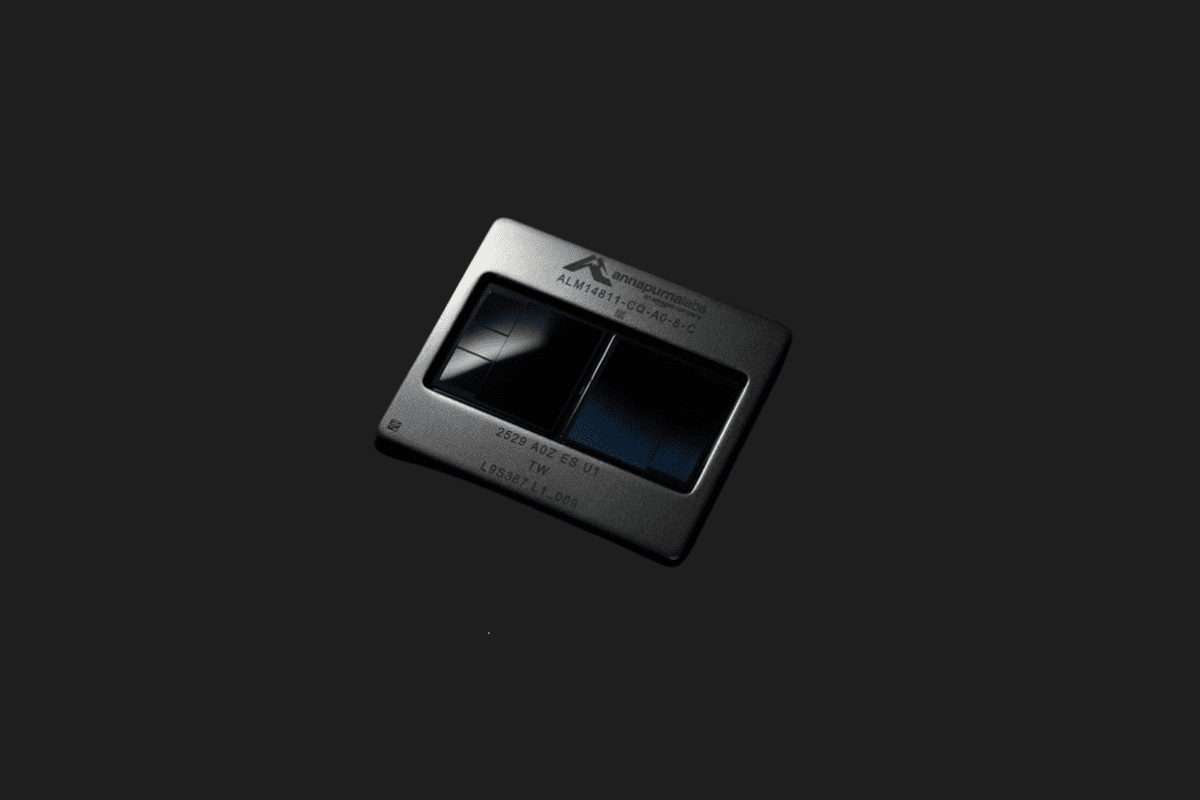

Amazon Web Services (AWS) is extending its long-running line of AI training chips with Trainium3. At its re:Invent 2025 event, AWS introduced the Trainium3 training processor and the large-scale Trainium3 UltraServer system. The company also confirmed that its next-generation processor, Trainium4, is in development.

4x Improvement in Training and Inference

Compared to its predecessor, Trainium2, Trainium3 offers a fourfold increase in both training and inference performance, as well as a fourfold increase in memory capacity. Each UltraServer hosts 144 Trainium3 chips, and AWS allows customers to connect thousands of these systems together. At maximum scale, a single deployment can use up to 1 million Trainium3 chips, a tenfold increase over the previous generation.
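To put those figures in perspective, the short sketch below works through the arithmetic. It uses only the numbers quoted above (144 chips per UltraServer, 1 million chips per deployment, a tenfold increase); the function name and the implied previous-generation ceiling are illustrative assumptions derived from those figures, not specifications published by AWS.

```python
import math

# Figures quoted in the article
CHIPS_PER_ULTRASERVER = 144
MAX_CHIPS_PER_DEPLOYMENT = 1_000_000
SCALE_UP_FACTOR = 10  # "tenfold increase" over the previous generation


def ultraservers_needed(total_chips, chips_per_server=CHIPS_PER_ULTRASERVER):
    """Hypothetical helper: whole UltraServers required to host total_chips."""
    return math.ceil(total_chips / chips_per_server)


servers = ultraservers_needed(MAX_CHIPS_PER_DEPLOYMENT)

# Assumption: derived purely from the "tenfold" claim, not an official number.
implied_previous_max = MAX_CHIPS_PER_DEPLOYMENT // SCALE_UP_FACTOR

print(f"UltraServers for 1M chips: {servers}")
print(f"Implied previous-generation ceiling: {implied_previous_max} chips")
```

Under these assumptions, a maximum-scale deployment works out to just under 7,000 UltraServers, which is consistent with the "thousands of systems" described above.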

Energy efficiency is another important feature of Trainium3. According to AWS, these systems consume approximately 40 percent less energy while delivering higher throughput. Early adopters of Trainium3 include Anthropic, the Japanese LLM startup Karakuri, SplashMusic, and Decart. These customers have reported faster inference, faster iteration, and fewer billable compute hours for the models they develop.

Trainium4 and Hybrid Ecosystem Strategy

AWS also previewed the next-generation Trainium4. Although no official release date was announced, Trainium4 promises a significant leap in performance, and its most notable feature is support for Nvidia NVLink Fusion. This approach shows that AWS positions its hardware not as an alternative to Nvidia solutions, but as a complementary element in a hybrid ecosystem. As a result, companies running CUDA-based applications will be able to integrate AWS's proprietary hardware without rebuilding their software stacks.

The AWS and Nvidia partnership covers connectivity technology, data center infrastructure, open model support, and rack-scale AI system deployment. NVLink Fusion support will be integrated into future platforms, including Trainium4, Graviton CPUs, and Nitro virtualization hardware. The company did not, however, share pricing or availability information for the new chips.
